Python Logging Reference Notes

References

Reference notes on Python Logging

Relevant Links

Notes

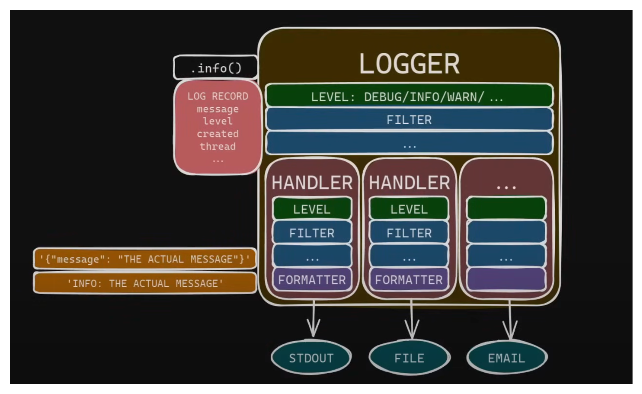

Mental Model for the Python Logger

The log record goes throw the logger top to bottom, going through any filters ( hide sensitive information ) and then gets routed to the handlers one at a time

If the logger drops the log record it’s dropped for good but if one of the handlers drops the record it still gets passed to the next handler

The

LOG RECORDis a python object so each handler has aFORMATTERthat turns the Python Object into actual text. This is where you can customize what the message will look likeOh no mind blown 🤯 this image is only for the root logger and what you see in the image above can be expanded into a tree of loggers access and created by name.

Once the child is done processing a log record it passes it up to its parent.

Only allow handlers, filters on the root logger

- Ensures any messages generated by 3rd party applications get handled and logged the same way by your own application

- Less complex

Don’t use the root logger in your code

- Get your own logger with

logger = logging.getLogger("my_app") - Avoid using

logging.info("users root logger")

Use dictConfig

- Allows you to explicitly list all the necessary components of your logging setup

import logging.config

logger = logging.getLogger("my_app")

# create logging config

logging_config = {

"version": 1,

"disable_existing_loggers": False,

# "filters": {...},

"formatters": {

"simple": {

"format": "%(levelname)s: %(message)s",

},

"detail": {

"format": "[%(levelname)s|%(module)s|L%(lineno)d] %(asctime)s: %(message)s",

"datefmt": "%Y-%m-%dT%H:%M:%S%z" # tip use ISO-8601 Timestamps with timezone

}

},

"handlers": {

"stdout": {

"class": "logging.StreamHandler",

"formatter": "simple",

"stream": "ext://sys.stdout", # ext meaning defined outside this config

},

"stderr": {

"class": "logging.StreamHandler",

"level": "WARNING"

"formatter": "simple",

"stream": "ext://sys.stderr", # ext meaning defined outside this config

},

"file": {

"class": "logging.handlers.RotatingFileHandler", # keeps appending to certain size then creates new file

"level": "DEBUG",

"formatter": "simple",

"filename": "logs/my_app.log",

"maxBytes": 10_000,

"backupCount": 3

},

},

"loggers": {

"root": {"level": "DEBUG", "handlers": ["stdout"]}

}

}

def main():

# specify it here

logging.config.dictConfig(config=logging_config)

logging.addHandler(logging.StreamHandler(...))

logging.debug("debug message")

logging.info("info message")

logging.warning("warning message")

logging.error("error message")

logging.critical("cirtical message")

try:

1 / 0

except ZeroDivisionError:

logger.exception("exception message")

if __name__ == "__main__":

main()Use a json or yaml config file for dictConfig

- json support is built into standard library so while the json file is more verbose you would have to bring in a dependency for the yaml parser

{

"version": 1,

"disable_existing_loggers": false,

# "filters": {...},

"formatters": {

"simple": {

"format": "%(levelname)s: %(message)s",

}

},

"handlers": {

"stdout": {

"class": "logging.StreamHandler",

"formatter": "simple",

"stream": "ext://sys.stdout",

}

},

"loggers": {

"root": {"level": "DEBUG", "handlers": ["stdout"]}

}

}- Load the config file

Store persisted logs in JSON format

- There are none built in so you would have to write your own or

pip install

- This would output jsonl lines so each line would be json formatted

Creating a custom filter

ImportantChallenge

- With the non error filter create a logging config that shows non-errors to stdout and errors to stderr

- filters prevent duplicates

Python Logging Performance is bad

- All log calls are synchronous and blocking

- The slow part is sending it where ever it needs to go

- Use a “QUEUE_HANDLER” which stores the logs in a que without blocking

- A “QUEUE_LISTENER” accepts those messages and passes them off to other handlers in another thread

{

"handlers": {

"queue_handler": {

"class": "logging.handlers.QueueHandler",

"handlers": [

"stderr",

"file"

],

"respect_handler_level": true // defaults to false which would send every message to every handler regardless of log level

}

},

"loggers": {

"root": {"level": "DEBUG", "handlers": ["queue_handler"]} // now the queue_handler is the only handler on the root logger

}

}def setup_logging():

config_file = pathlib.Path("logging_configs/config.json")

with open(config_file) as f_in:

config = json.load(f_in)

logging.config.dictConfig(config)

if queue_handler := logging.getHandlerByName("queue_handler"):

queue_handler.listener.start()

atexit.register(queue_handler.listener.stop)For libraries don’t configure logging

- For application code you know the user

- You can still use logging just don’t configure it with

dictConfig